Enhancing linear regression through neighbor-based similarity analysis

Abstract

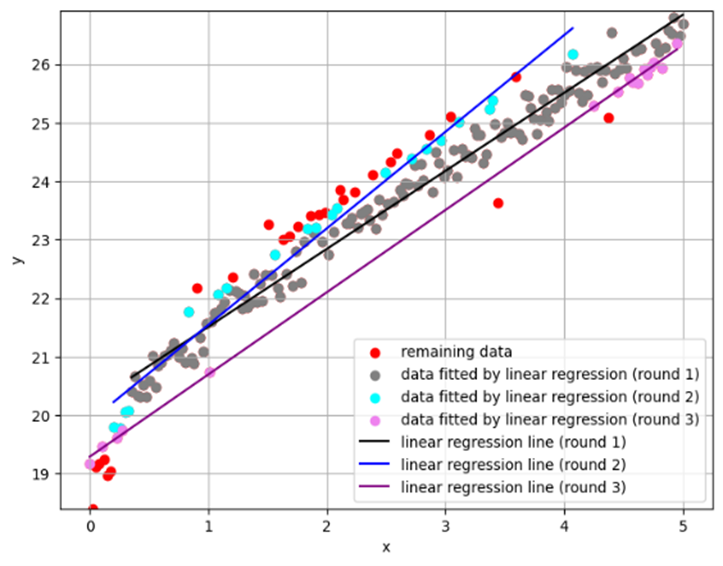

Simple machine learning models such as linear regression show clear limitations on real-world datasets with complex, non-linear relationships. To address this problem, we propose a novel algorithm that enhances linear regression without requiring complex mathematical or statistical expressions. Instead, the algorithm segments the data into multiple subgroups, or neighbors, each with its own best-fitting line. The primary objective is to predict unseen data points more accurately by using k-nearest neighbors to identify the most similar neighbors and applying their corresponding linear regression lines. Empirical evidence from three publicly available housing price datasets demonstrates the algorithm’s effectiveness in improving on traditional linear regression models.
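The general idea of fitting a separate line to each neighborhood can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify how subgroups are formed, so this sketch assumes the simplest variant, in which the k nearest training points (Euclidean distance) to each query form its subgroup and an ordinary least-squares line is fitted to them. The function names `fit_line`, `predict_global`, and `predict_local`, the value `k=10`, and the synthetic V-shaped data are all illustrative assumptions.

```python
import numpy as np


def fit_line(X, y):
    """Ordinary least squares with an intercept; returns [intercept, slopes...]."""
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef


def predict_global(X_train, y_train, X_query):
    """Baseline: a single regression line fitted to all training points."""
    coef = fit_line(X_train, y_train)
    return np.column_stack([np.ones(len(X_query)), X_query]) @ coef


def predict_local(X_train, y_train, X_query, k=10):
    """Assumed variant: fit one line per query on its k nearest training points."""
    preds = []
    for x in X_query:
        dist = np.linalg.norm(X_train - x, axis=1)  # Euclidean distance to the query
        idx = np.argsort(dist)[:k]                  # indices of the k most similar points
        coef = fit_line(X_train[idx], y_train[idx])
        preds.append(coef[0] + x @ coef[1:])
    return np.array(preds)


# Synthetic, noise-free V-shaped data (an assumption for illustration only):
# a single line cannot follow y = |x|, but a line fitted per neighborhood can.
X_train = np.linspace(-3, 3, 61).reshape(-1, 1)
y_train = np.abs(X_train[:, 0])
X_query = np.array([[-2.0], [2.0]])

global_pred = predict_global(X_train, y_train, X_query)
local_pred = predict_local(X_train, y_train, X_query, k=10)

print("true values :", np.abs(X_query[:, 0]))
print("global line :", global_pred)
print("local lines :", local_pred)
```

On this toy data the neighborhood of each query lies entirely on one arm of the V, so the local line recovers the target, while the single global line is flat and predicts the same value for both queries. With real, noisy data the choice of k trades off flexibility against the stability of each local fit.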

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
