Enhancing Autonomous Driving: A Novel Approach of Mixed Attack and Physical Defense Strategies


Abstract

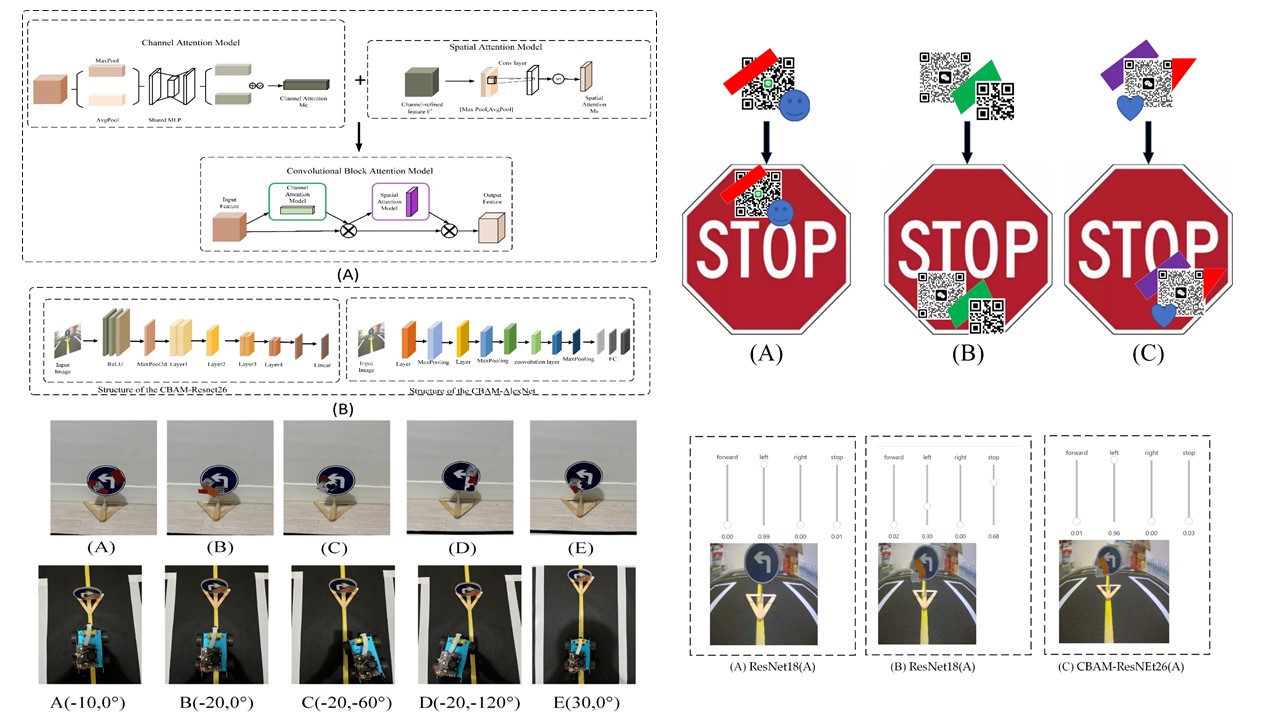

Adversarial attacks pose a significant threat to the safety of autonomous driving, especially in the physical world, where "sticker-paste" attacks on traffic signs are prevalent. However, most of these attacks are single-category attacks that cause little interference. This paper builds an autonomous driving platform and conducts extensive experiments on five single-category attacks. We then propose a new physical attack: a mixed attack that combines different single-category physical attacks. The proposed attack outperforms existing methods and reduces the traffic sign recognition accuracy of the autonomous driving platform by 38%. We also propose new anti-jamming models for physical adversarial defense, CBAM-ResNet26 and CBAM-Alexnet, which raise the platform's traffic sign recognition accuracy to 63% under the mixed attack. Finally, experiments with datasets containing different ratios of adversarial examples show that, in adversarial training, a higher ratio of adversarial examples yields higher recognition accuracy, but too high a ratio reduces the accuracy on normal traffic signs. The optimal ratio for physical adversarial defense training is found to be 1:2.
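To make the idea of a fixed adversarial-example ratio concrete, the following is a minimal sketch of how a training set could be assembled at a given ratio. It is not the authors' pipeline. The function name `mix_training_set`, the sample labels, and the reading of 1:2 as "one adversarial example per two normal examples" are all assumptions made for illustration; the abstract does not state which side of the ratio is which.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def mix_training_set(
    clean: Sequence[T],
    adversarial: Sequence[T],
    ratio: Tuple[int, int] = (1, 2),
    seed: int = 0,
) -> List[Tuple[T, bool]]:
    """Return a shuffled list of (sample, is_adversarial) pairs.

    ASSUMPTION: ratio = (a, c) is read as `a` adversarial samples for
    every `c` clean samples. The source only states "1:2".
    """
    adv_parts, clean_parts = ratio
    if adv_parts <= 0 or clean_parts <= 0:
        raise ValueError("both ratio parts must be positive")

    # Largest number of whole ratio units that both pools can supply.
    units = min(len(adversarial) // adv_parts, len(clean) // clean_parts)

    rng = random.Random(seed)  # seeded so the mix is reproducible
    chosen_adv = rng.sample(list(adversarial), units * adv_parts)
    chosen_clean = rng.sample(list(clean), units * clean_parts)

    mixed = [(s, True) for s in chosen_adv] + [(s, False) for s in chosen_clean]
    rng.shuffle(mixed)
    return mixed


# Hypothetical stand-ins for image paths or tensors.
clean_signs = [f"clean_{i}" for i in range(10)]
attacked_signs = [f"adv_{i}" for i in range(4)]
train_set = mix_training_set(clean_signs, attacked_signs, ratio=(1, 2))
```

Changing `ratio` and retraining on each resulting set would be one way to compare different adversarial-example ratios, with accuracy measured separately on attacked and normal signs.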

Article Details

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
